California’s Landmark AI Safety Law Raises the Global Bar

California has become the first U.S. state to require frontier AI developers to publicly disclose safety protocols and report safety incidents within 15 days. Governor Gavin Newsom signed Senate Bill 53, the Transparency in Frontier Artificial Intelligence Act, into law on September 29, 2025, marking a watershed moment in AI governance.

What Happened

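The law applies to developers of "frontier models," defined as AI systems trained with more than 10^26 floating-point operations of compute. The strictest obligations fall on "large frontier developers," those with more than $500 million in annual revenue. Companies must now: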

- Publish risk mitigation and safety protocols.
- Report critical safety incidents to California's Office of Emergency Services within 15 days.
- Share summaries of catastrophic-risk assessments with state regulators.

Why It Matters

Until now, oversight of frontier AI in the U.S. has relied largely on voluntary commitments. This law forces transparency in an industry known for secrecy. It parallels the EU AI Act and China's draft AI rules, but sets a new precedent in the United States.

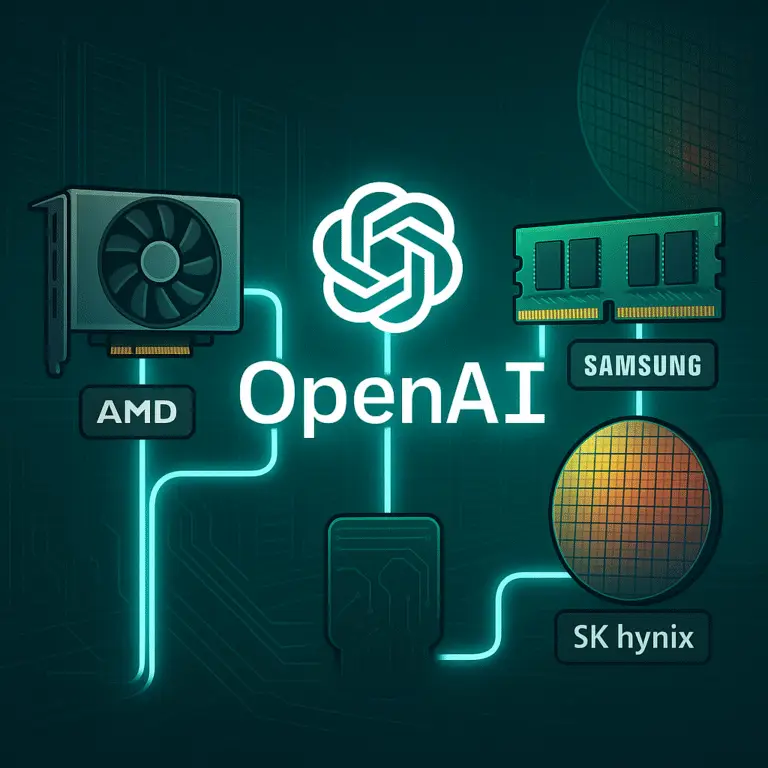

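For companies like OpenAI, Anthropic, and Google DeepMind, compliance will mean more rigorous documentation. It will also bring legal exposure: violations, such as failing to publish required documents or report incidents, can draw civil penalties of up to $1 million each, enforced by the state Attorney General.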

Future Implications

- Other states may adopt similar frameworks, creating a patchwork of AI laws in the U.S.
- Federal lawmakers may face pressure to set a single national standard.
- Public trust could improve if disclosures reveal strong safety testing, or erode if they expose flaws.

👉 Takeaway: AI development is no longer a “move fast and break things” game. Safety and accountability are entering the spotlight.