The Great AI Energy Debate: Can Data Centers Stay Sustainable?

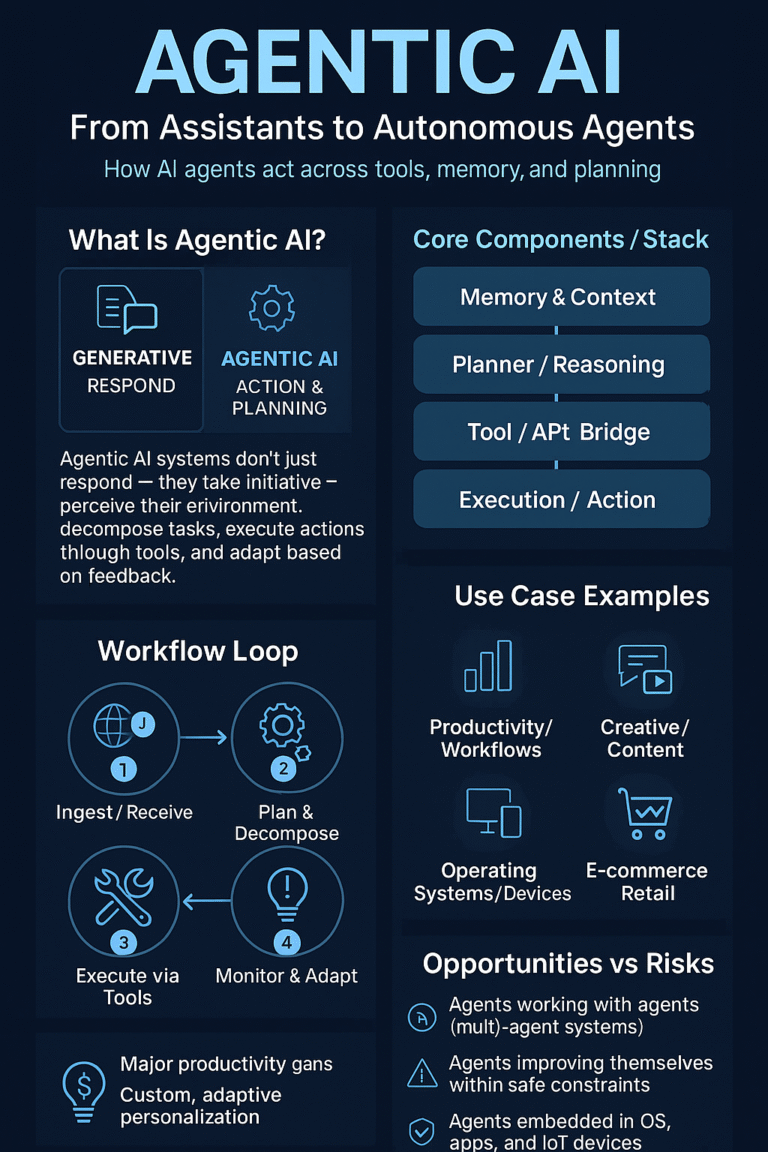

Every new AI model seems smarter, and hungrier. Behind every ChatGPT or Gemini lie data centers that draw gigawatts of power and consume millions of liters of water. The question haunting the AI boom: can intelligence scale without burning the planet?

What’s Happening

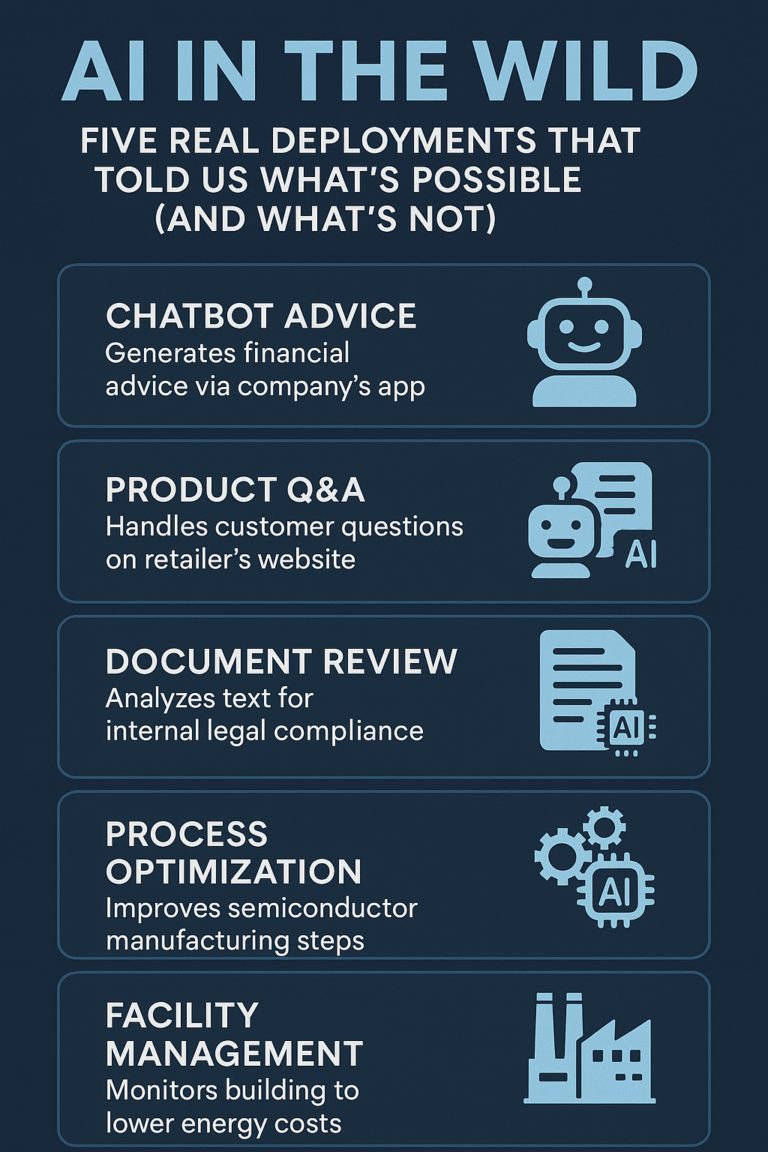

AI compute demand is exploding. Data centers now consume 4–5% of global electricity, up from 1% five years ago. Tech giants including Microsoft, Google, and OpenAI are scrambling to offset emissions with renewables.

- Microsoft’s Iowa AI center reportedly used 1.7 billion liters of water for cooling (2024).

- Google’s 2025 “Green TPU Clusters” use liquid immersion cooling to cut power draw by 22%.

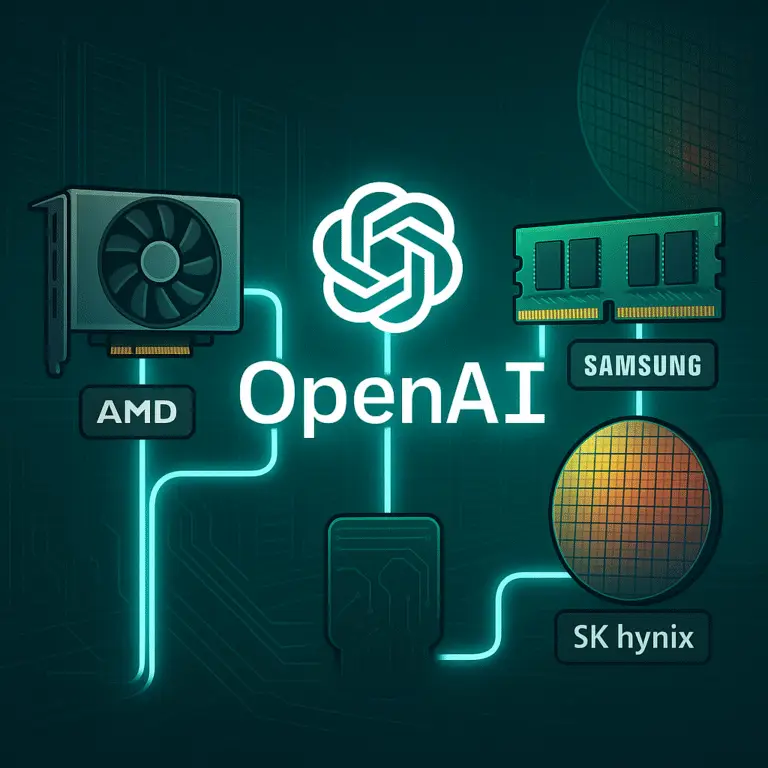

- OpenAI’s Stargate project aims to co-locate with hydroelectric grids to reduce carbon load.
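
To put a figure like the 22% cut in perspective, here is a rough back-of-the-envelope sketch in Python. The 100 MW facility size and the constant year-round load are illustrative assumptions, not numbers reported by any of the companies above.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_energy_gwh(power_mw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in GWh used by a facility drawing a constant power_mw."""
    return power_mw * hours / 1000.0


def savings_gwh(power_mw: float, reduction: float) -> float:
    """Annual energy saved if power draw falls by the given fraction."""
    return annual_energy_gwh(power_mw) * reduction


baseline_mw = 100.0  # hypothetical facility size, not from the article
reduction = 0.22     # the 22% cut quoted above

baseline = annual_energy_gwh(baseline_mw)
saved = savings_gwh(baseline_mw, reduction)

print(f"Baseline: {baseline:.0f} GWh/year")
print(f"Saved:    {saved:.1f} GWh/year")
```

Under those assumptions, the baseline works out to 876 GWh per year and the saving to roughly 193 GWh per year. Real facilities run at varying load, so treat this as an order-of-magnitude estimate only.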

Why It Matters

The sustainability debate isn’t theoretical; it’s existential. AI-driven electricity demand could soon exceed grid capacity in regions like Texas and Ireland.

- Policy pressure: The EU is drafting “AI Energy Disclosure Directives.”

- Corporate response: Amazon Web Services plans 100% renewable AI zones by 2026.

- Innovation push: Startups like Heata and Submer are repurposing AI heat for district heating systems.

“The AI boom won’t slow down — so sustainability has to catch up.”

What’s Next

- Green Compute Credit Markets will emerge to trade carbon-neutral AI power.

- Hybrid cloud deployments will optimize for both cost and energy.

- New hardware designs — optical chips and low-power AI cores — are in the pipeline.

- Public scrutiny will grow as AI usage enters mainstream politics.
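
One way to picture "optimizing for both cost and energy" is to fold an assumed carbon price into the electricity bill and pick the cheapest site. The sketch below is a minimal illustration, not a description of how any provider schedules work: the `Site` class, the `pick_site` helper, the site names, and every number in it are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    cost_per_kwh: float      # USD per kWh (hypothetical)
    carbon_g_per_kwh: float  # grams of CO2 per kWh (hypothetical)


def pick_site(sites, energy_kwh, carbon_price_per_tonne):
    """Return the site with the lowest electricity cost plus assumed carbon cost."""
    def total_cost(site):
        electricity = site.cost_per_kwh * energy_kwh
        tonnes_co2 = site.carbon_g_per_kwh * energy_kwh / 1_000_000
        return electricity + tonnes_co2 * carbon_price_per_tonne

    return min(sites, key=total_cost)


# Two made-up deployment options for a job needing 10,000 kWh.
sites = [
    Site("on_prem", cost_per_kwh=0.08, carbon_g_per_kwh=450.0),
    Site("cloud_hydro", cost_per_kwh=0.10, carbon_g_per_kwh=30.0),
]
job_kwh = 10_000

print(pick_site(sites, job_kwh, carbon_price_per_tonne=0.0).name)    # on_prem
print(pick_site(sites, job_kwh, carbon_price_per_tonne=100.0).name)  # cloud_hydro
```

With carbon ignored, the cheaper electricity wins. Once carbon is priced at an assumed $100 per tonne, the lower-emission site becomes the better choice, which is the trade-off a hybrid deployment would have to weigh.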

Key Takeaways

- Data centers could consume 5–8% of global electricity by 2027.

- Renewables and cooling innovation are critical to AI’s future.

- Expect “energy efficiency scores” to become AI benchmarks alongside accuracy.

- The sustainability of intelligence is the defining tech question of the decade.