The Agentic AI Era Has Arrived — What It Means for Business & Society

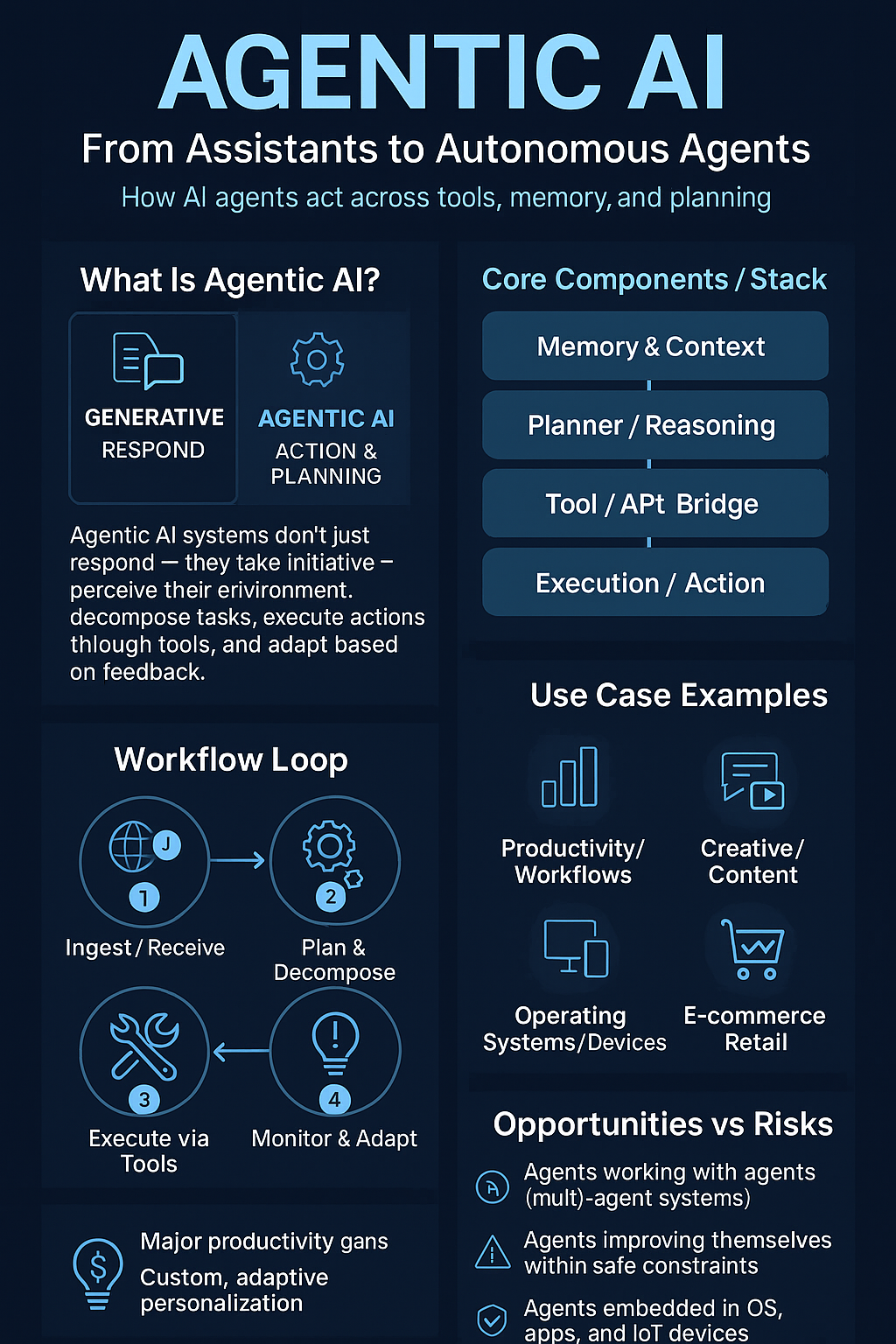

Over the past year, generative AI has moved quickly, but in 2025 we’re entering the age of agentic AI: systems that don’t just respond but take initiative, reasoning, planning, and executing tasks across environments. What was once hypothetical is now emerging across platforms, tools, and enterprises. In this article, we explain what agentic AI means, the breakthroughs that enabled it, real-world applications, and the ethical and societal stakes we’ll need to manage.

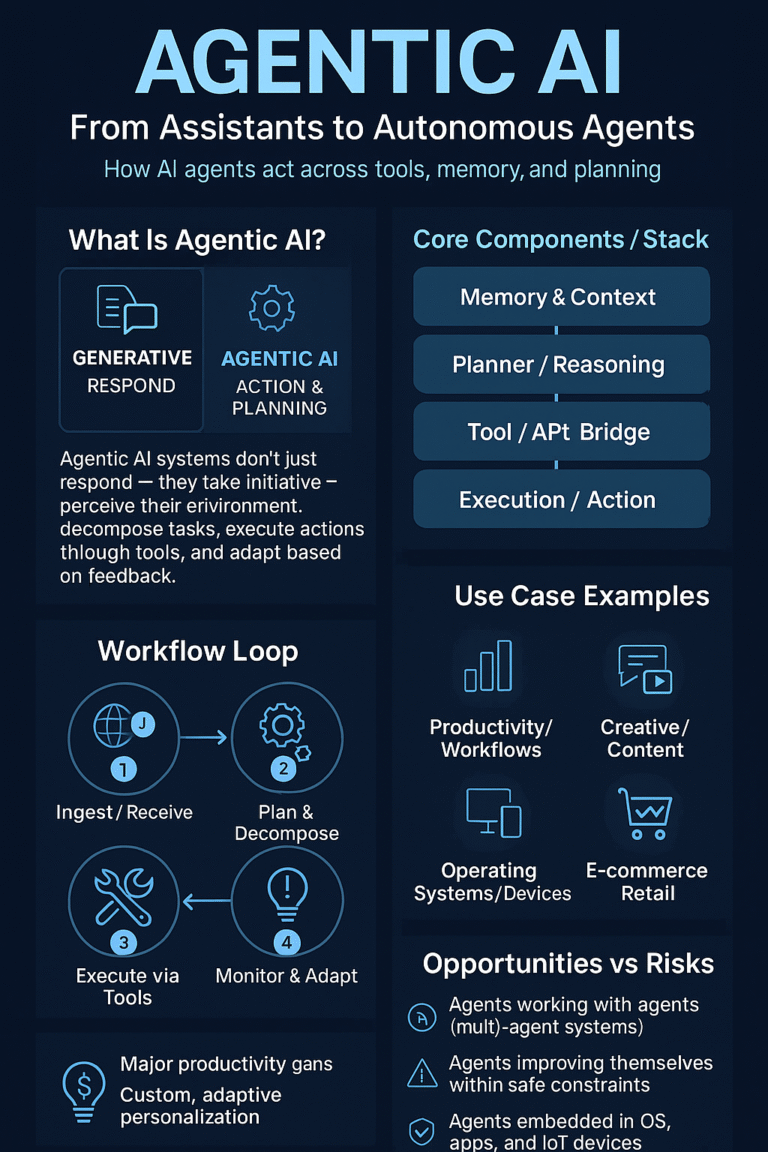

What Exactly Are AI Agents?

AI agents can be thought of as autonomous (or semi-autonomous) sub-systems that:

- Understand context or goals

- Break goals into multi-step plans

- Execute actions (via APIs, plugins, or environments)

- Monitor outcomes and adapt

Unlike pure chatbots or prompt-driven models, agents act, often across tools, applications, and workflows. They may conduct research, schedule meetings, automate cross-app tasks, and monitor for changes over time.
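The understand, plan, execute, monitor, and adapt cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular vendor builds agents: the planner is a hard-coded stand-in for a language model, and the tools (`search`, `summarize`) are hypothetical placeholders for real API or plugin calls.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools; a real agent would call external APIs or plugins here.
def search(query: str) -> str:
    return f"results for {query!r}"

def summarize(text: str) -> str:
    return f"summary of {text}"

TOOLS: dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}

@dataclass
class Step:
    tool: str
    arg: str  # the placeholder "{prev}" is replaced with the previous step's output

@dataclass
class Agent:
    max_retries: int = 1
    log: list[str] = field(default_factory=list)

    def plan(self, goal: str) -> list[Step]:
        # Stand-in planner: a real agent would ask a model to break the goal down.
        return [Step("search", goal), Step("summarize", "{prev}")]

    def run(self, goal: str) -> str:
        prev = ""
        for step in self.plan(goal):
            arg = step.arg.replace("{prev}", prev)
            for _ in range(self.max_retries + 1):
                try:
                    prev = TOOLS[step.tool](arg)         # execute the action
                    self.log.append(f"ok: {step.tool}")  # monitor the outcome
                    break
                except Exception as exc:
                    # Adapt when a step fails (here, simply by retrying it).
                    self.log.append(f"retry {step.tool}: {exc}")
            else:
                raise RuntimeError(f"step {step.tool!r} failed after retries")
        return prev
```

Calling `Agent().run("agent frameworks")` chains the two steps, feeding the search output into the summarizer; if a tool raises, the loop retries it up to `max_retries` times before giving up. A production agent would more likely replan than simply retry.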

IBM warns that while agentic AI is the defining narrative of 2025, hype often outpaces realistic expectations. Many “agents” today are still narrow and constrained by permissions, memory, and safety checks. (Source: IBM)

Key Breakthroughs: Memory, Reasoning, Tool Use

For AI agents to work reliably, several technical advances had to mature:

- Long-term memory and context persistence: Agents must remember not only the immediate prompt context but user preferences, past actions, and stateful knowledge across sessions.

- Tool integration & API chaining: The ability to call external APIs, plugins, databases, or orchestrate sub-agents.

- Dynamic planning & reasoning: Rather than fixed prompt chains, agents need to adapt when things go off script.

- Safety, access control, and sandboxing: To prevent runaway behavior, agents must operate within boundaries and under user oversight.
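As a rough illustration of the first item, long-term memory, the sketch below persists user preferences and past actions to a JSON file so that a later session can pick them up. The `AgentMemory` class and its file layout are hypothetical; real systems often use databases or vector stores instead.

```python
import json
from pathlib import Path

class AgentMemory:
    """Hypothetical long-term memory stored in a JSON file between sessions."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Reload whatever an earlier session saved; start empty otherwise.
        if path.exists():
            self.data = json.loads(path.read_text())
        else:
            self.data = {"preferences": {}, "history": []}

    def remember_preference(self, key: str, value: str) -> None:
        self.data["preferences"][key] = value

    def record_action(self, action: str) -> None:
        self.data["history"].append(action)

    def save(self) -> None:
        # Write the full state so the next session can reload it.
        self.path.write_text(json.dumps(self.data, indent=2))
```

A second `AgentMemory` created on the same path sees the preferences and history saved by the first, which is the cross-session persistence the bullet describes.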

Microsoft, for instance, is pushing the concept of the “open agentic web”: a future in which agents built by multiple parties can interoperate. (Source: The Official Microsoft Blog)

Anthropic’s new Skills capability is a concrete example: packaged, domain-specialized logic (e.g. Excel processing, brand guidelines) that Claude agents can draw on, and that can be composed and deployed across Claude environments. (Source: The Verge)

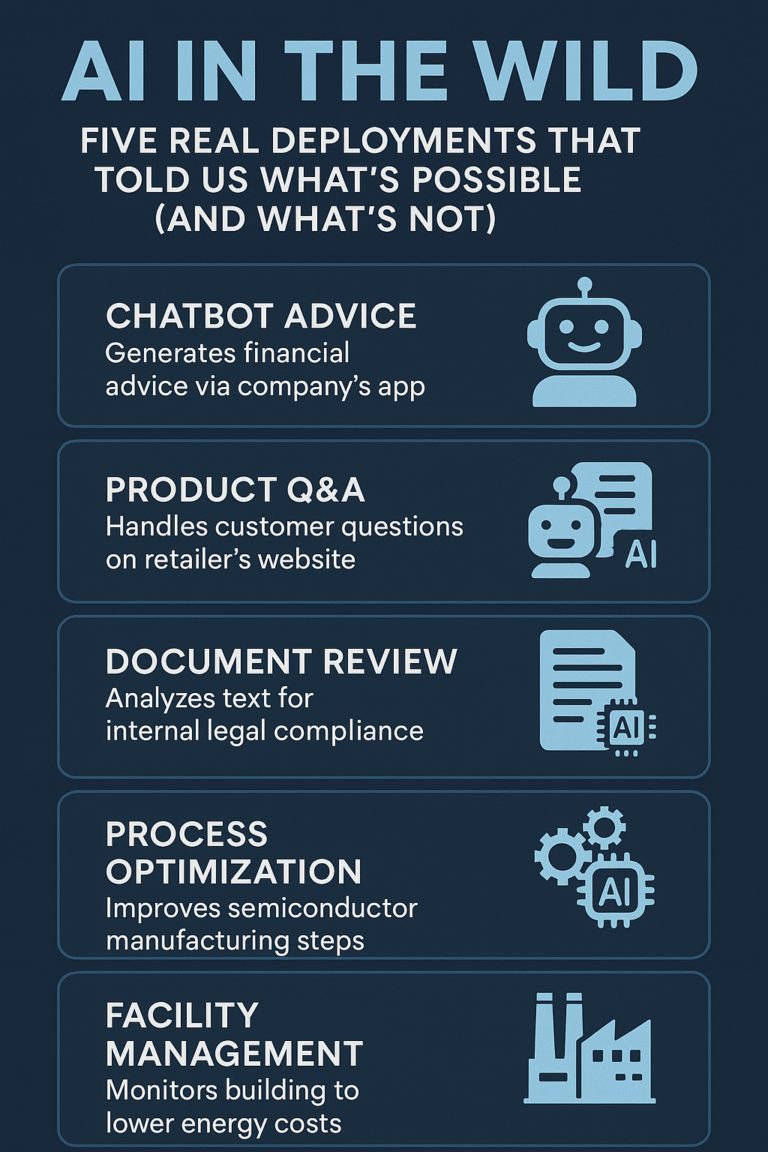

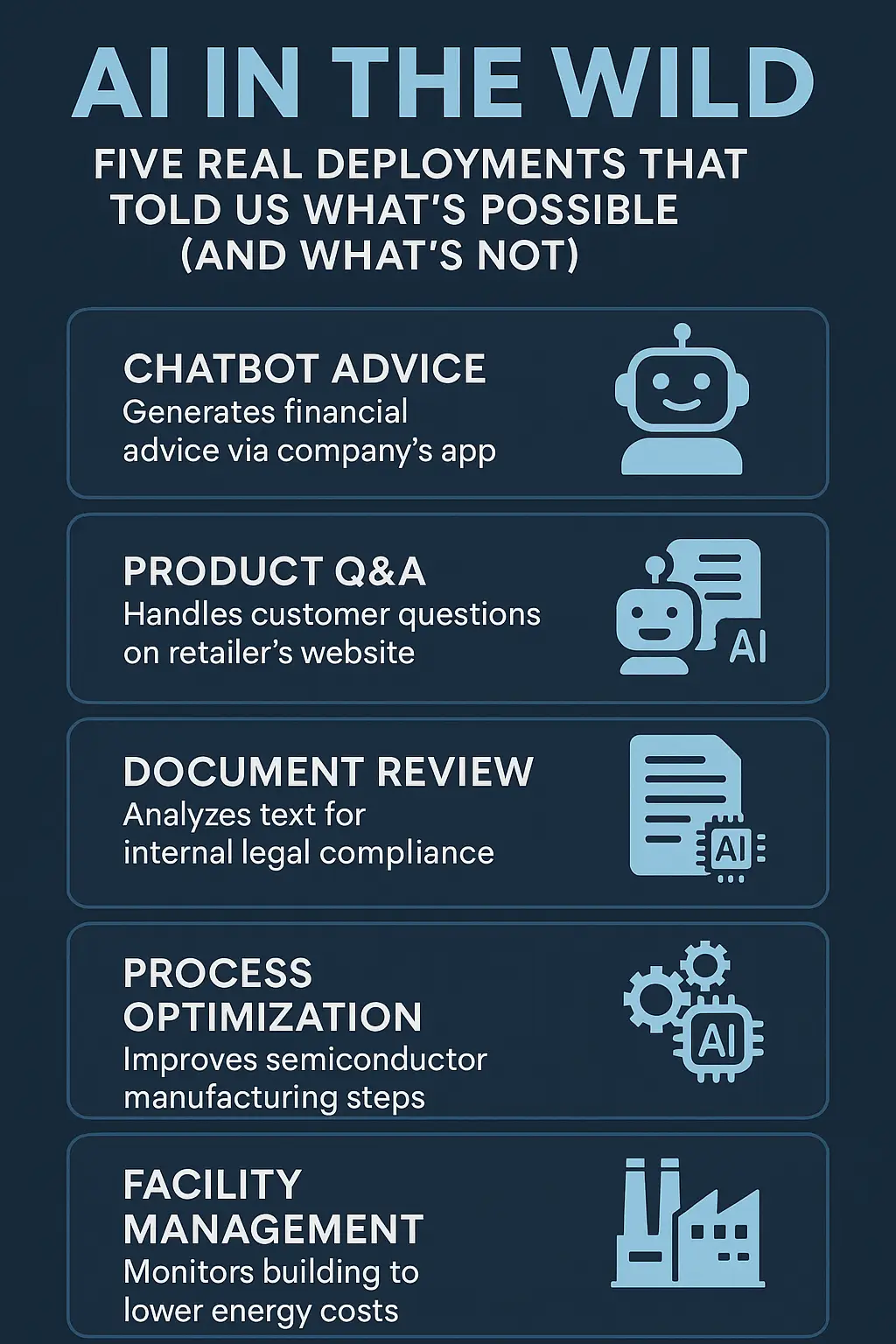

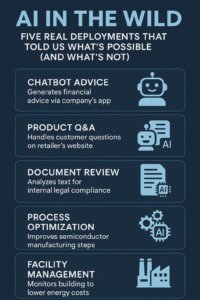

Use Cases Now in Motion

Here are sectors where agents are already making an impact:

| Sector / Use Case | Agent Behavior | Real Example |
| --- | --- | --- |
| Productivity & Office Work | Automate document generation, spreadsheet workflows, email follow-ups | Claude Skills for Excel, or OpenAI AgentKit being used internally at companies (The Verge) |
| E-commerce / Retail Ops | Monitor inventory, coordinate ad campaigns, schedule logistics | Walmart is rolling out tools that assist associates with translation, task management, and operations via AI-driven assistants (Walmart Corporate News and Information) |
| Creative & Media | Agents that plan, shoot, edit, and schedule content across platforms | YouTube integrating Veo 3 into Shorts, as well as creative automation across media pipelines (blog.youtube) |
| Smart Devices / OS | The OS or device “assistant” becomes proactive: anticipating tasks, auto-configuring, etc. | In Windows 11, Microsoft is expanding Copilot’s role, now allowing “Hey Copilot” voice triggers and active tasks like restaurant bookings via the desktop (Reuters) |

Gradually, AI agents will become embedded in apps and operating systems as functional companions—not just assistants.

Opportunities & Risks for Businesses

Opportunities

- Potentially large productivity gains from automating complex, multi-step workflows.

- More personalized user experiences as agents tailor themselves to individual or organizational styles.

- Competitive differentiation by embedding “agentic layers” in existing software.

- New product models: agents as a subscription, “agent marketplaces,” or agent composition as a service.

Risks & Challenges

- Trust & explainability: As agents act autonomously, users need clarity on why decisions were made.

- Security & access control: Agents touching APIs or data must be strictly controlled.

- Bias & drift: Without constant monitoring, agents may drift from their intended behavior or reproduce biases in skewed data.

- Regulation & accountability: If an agent performs a harmful task, who is liable — the user, the developer, or the AI vendor?
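Two of these risks, security and explainability, are often addressed together by gating every tool call and recording why it was made. The sketch below is one hypothetical way to do that in Python: an allowlist of permitted tools, a human-approval hook for sensitive ones, and an audit log that stores the agent’s stated reason alongside the decision. The class, method, and tool names are illustrative assumptions, not any vendor’s API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

@dataclass
class GuardedToolbox:
    """Hypothetical gate that every agent tool call passes through before dispatch."""
    allowed: set[str]         # tools this agent may use at all
    needs_approval: set[str]  # subset that also requires a human "yes"
    audit: list[dict] = field(default_factory=list)

    def authorize(self, tool: str, reason: str,
                  approve: Callable[[str], bool] = lambda tool: False) -> bool:
        """Return True if the call may proceed; log every decision with its reason."""
        if tool not in self.allowed:
            decision = "denied: not permitted"
        elif tool in self.needs_approval and not approve(tool):
            decision = "denied: approval withheld"
        else:
            decision = "allowed"
        # The audit trail lets a person later see what was attempted and why.
        self.audit.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "tool": tool,
            "reason": reason,
            "decision": decision,
        })
        return decision == "allowed"
```

With `allowed={"read_calendar", "send_email"}` and `needs_approval={"send_email"}`, reading the calendar passes, sending an email is blocked unless the `approve` callback returns `True`, and an unlisted tool such as `delete_files` is always denied. Each outcome appears in `audit`, which gives users something concrete to inspect when asking why an agent acted.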

The Future: From Assistants to Co-Pilots

Over time, agents will evolve from narrow function modules to full “co-pilots” — systems that deeply integrate with users’ workflows, personalities, and preferences. Key future traits might include:

- Seamless switching between modes (research, writing, planning)

- Multi-modal reasoning (text, vision, code, video)

- Inter-agent collaboration (specialist agents teaming up)

- Self-improvement and continual learning within guardrails

But the transition must be safe, transparent, and human-aligned.

Conclusion

We stand at a turning point in AI: not just smarter models, but smarter actors. As agents emerge in work, creativity, OS-level assistants, and enterprise systems, the next 3–5 years will define standards for control, trust, and integration. Businesses that begin exploring agentic architectures now will have a head start, but the responsibility to build ethically, safely, and transparently is greater than ever.