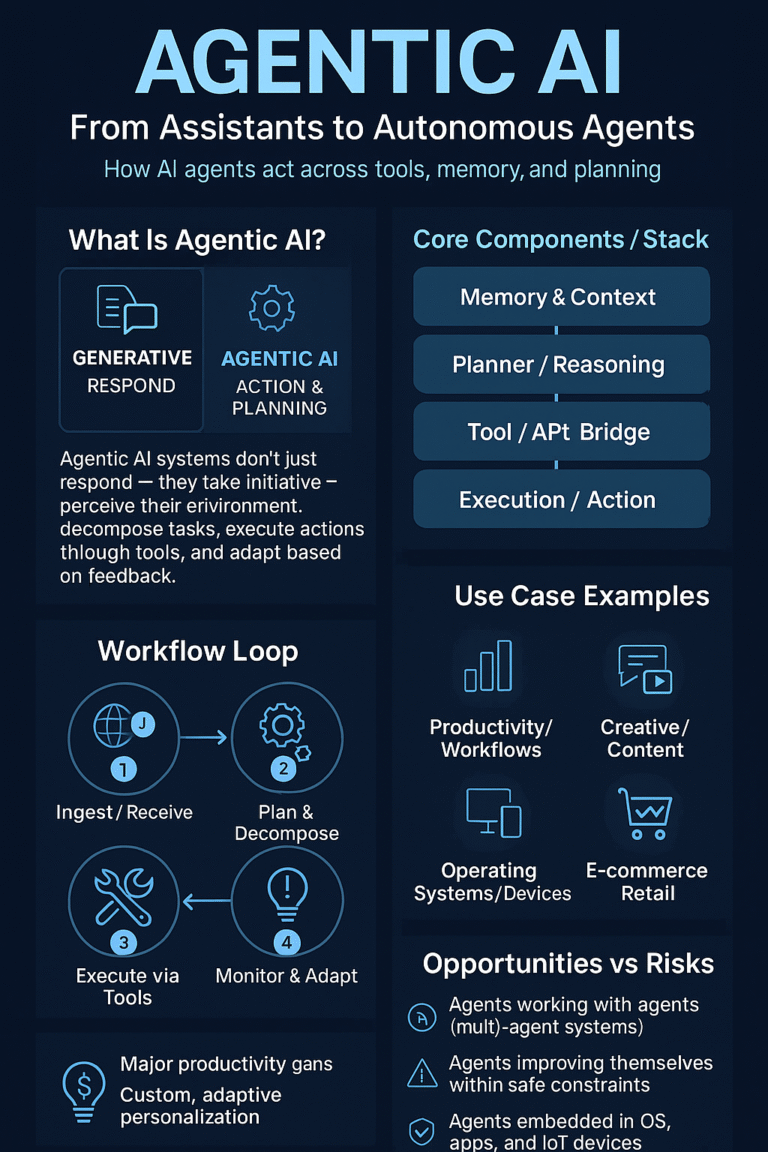

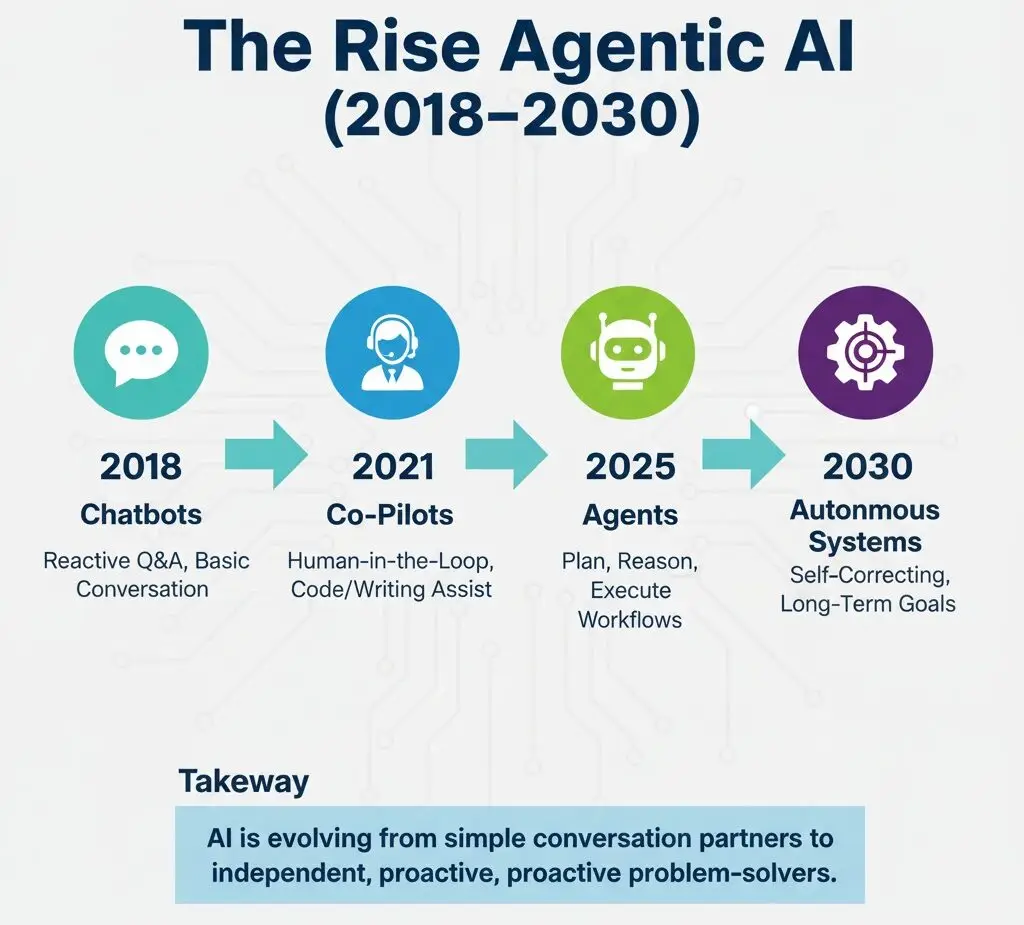

The Rise of Agentic AI: From Chatbots to Autonomous Systems

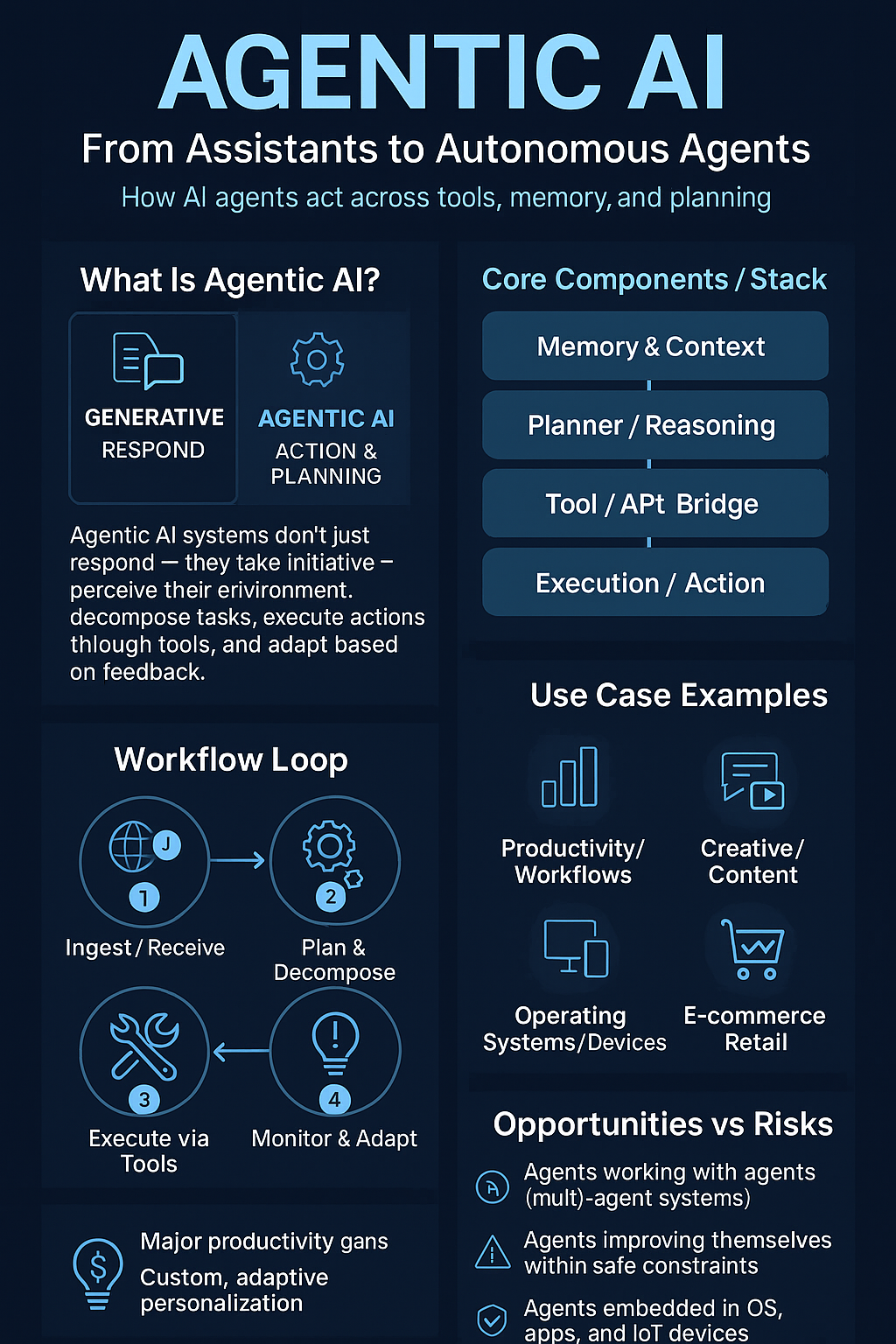

Artificial intelligence is shifting from reactive chatbots to proactive agents. The year 2025 marks a turning point: AI is no longer just generating answers; it’s planning, reasoning, and executing workflows. This is the era of agentic AI.

🔹 What Is Agentic AI?

Agentic AI refers to systems that don’t just respond; they act with autonomy. Unlike chatbots, which only generate one-off answers, agents:

- Plan multi-step tasks.
- Adapt based on outcomes.
- Execute without constant human prompts.

Early examples of this shift include Anthropic’s Claude Sonnet 4.5, which Anthropic reports can keep working on complex, multi-step tasks for more than 30 hours, and Microsoft’s AutoGen framework, which enables multi-agent conversations.
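To make the plan, execute, and adapt cycle concrete, here is a minimal Python sketch of an agent loop. It is an illustration under stated assumptions, not how Claude or AutoGen work internally: the `Agent` class, its fixed `plan` stub, and the `search`/`summarize` tools are all hypothetical, and a real agent would hand planning and tool calls to a language model and external APIs.

```python
from dataclasses import dataclass, field
from typing import Callable

# A tool takes a string argument and returns (success, observation).
Tool = Callable[[str], tuple[bool, str]]


@dataclass
class Agent:
    tools: dict[str, Tool]
    max_retries: int = 2
    log: list[str] = field(default_factory=list)

    def plan(self, goal: str) -> list[tuple[str, str]]:
        # Hypothetical fixed planner; a real agent would ask a model to break the goal down.
        return [("search", goal), ("summarize", goal)]

    def run(self, goal: str) -> bool:
        for tool_name, arg in self.plan(goal):  # 1. plan a multi-step task
            for attempt in range(self.max_retries + 1):
                ok, observation = self.tools[tool_name](arg)  # 2. execute a step
                self.log.append(f"{tool_name}({arg!r}) -> {observation}")
                if ok:
                    break
                # 3. adapt: adjust the input and retry instead of waiting for a human prompt
                arg = f"{arg} (retry {attempt + 1})"
            else:
                return False  # every attempt at this step failed; stop the run
        return True


# Hypothetical tools: `search` fails once (simulating a timeout), then succeeds.
calls = {"search": 0}


def search(query: str) -> tuple[bool, str]:
    calls["search"] += 1
    if calls["search"] == 1:
        return False, "timeout"
    return True, f"3 results for {query}"


def summarize(query: str) -> tuple[bool, str]:
    return True, f"summary of {query}"


agent = Agent(tools={"search": search, "summarize": summarize})
ok = agent.run("agentic AI frameworks")
print(ok, len(agent.log))  # True 3
```

The first `search` call fails, the agent adjusts and retries on its own, and the run completes after three logged steps. The retry budget (`max_retries`) is the kind of limit that keeps a self-directed loop from running indefinitely.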

🔹 Why It Matters Now

-

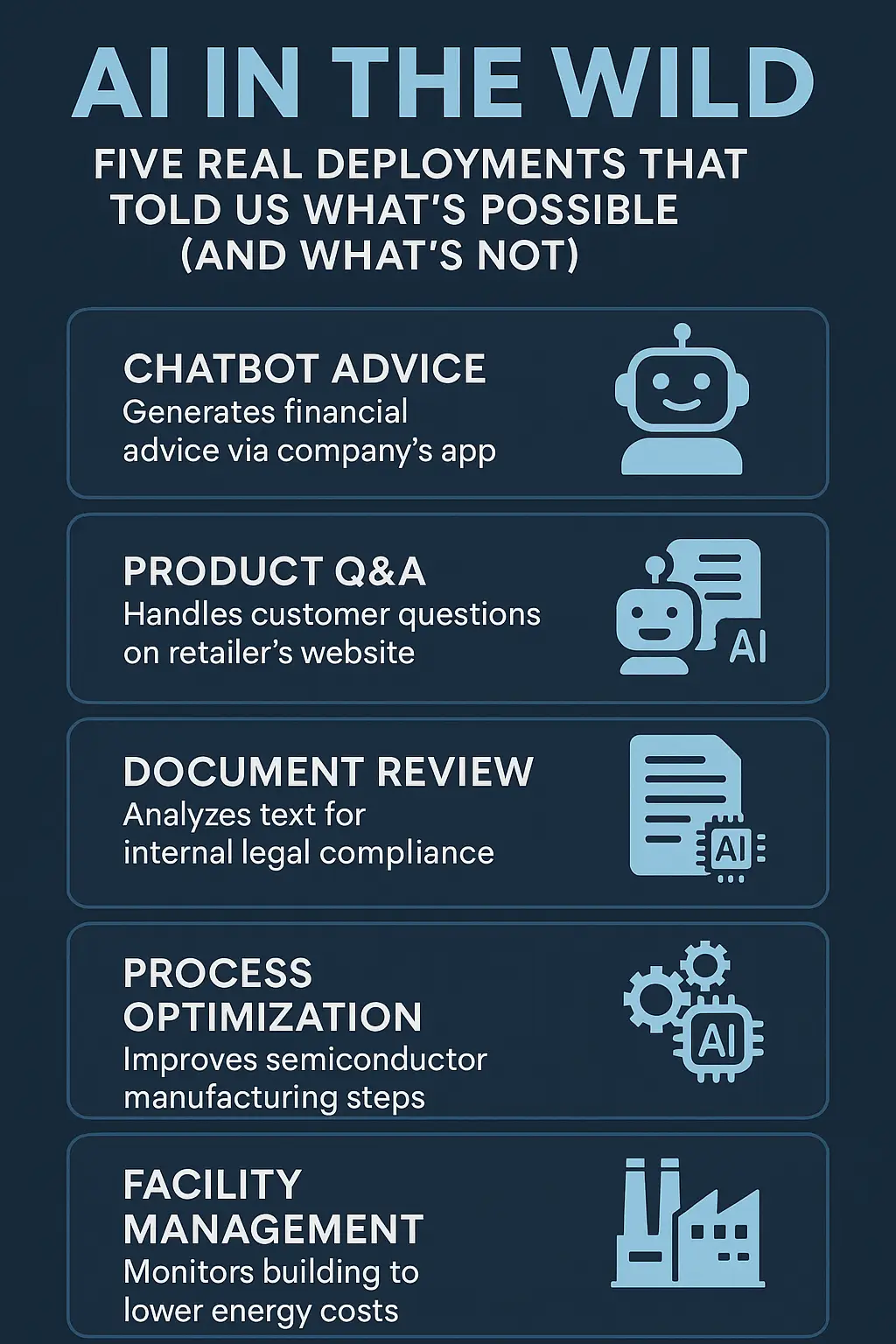

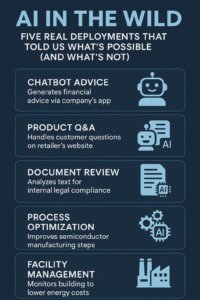

Productivity Gains → Agents can handle entire coding cycles, research reports, or simulations with minimal input.

-

Reduced Human Oversight → Less “prompt babysitting,” more true delegation.

-

Risks Magnified → Autonomy means errors can compound faster, with greater real-world consequences.

-

Ecosystem Growth → Frameworks like LangChain, CrewAI, and AutoGen are maturing, giving developers plug-and-play agent tools.
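The point that errors compound can be made concrete with simple arithmetic. Assuming, for illustration, that each step of a task succeeds independently with the same probability p, the chance that an n-step run finishes without a single error is p^n. Even at 95% per step, a 20-step run completes cleanly only about 36% of the time.

```python
def chain_success(p: float, steps: int) -> float:
    """Probability that all `steps` succeed, assuming each succeeds independently with probability p."""
    return p ** steps


for steps in (1, 5, 10, 20, 50):
    print(f"{steps:>2} steps at 95% each -> {chain_success(0.95, steps):.1%}")
# Prints 95.0%, 77.4%, 59.9%, 35.8%, 7.7% for 1, 5, 10, 20, 50 steps.
```

Real failures are rarely independent, and agents that check their own work can recover from some mistakes, so treat this as an intuition for why long autonomous runs need safeguards rather than a model of any particular system.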

🔹 Challenges Ahead

- Robustness: Agents still stumble in ambiguous or adversarial scenarios.
- Transparency: How does an agent reach a decision? Black-box reasoning remains a challenge.
- Control: When should a human step back in? Oversight mechanisms are still evolving.
- Safety: Misaligned goals in autonomous agents could create high-stakes failures.
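One common answer to the control question is an approval gate: the agent proceeds on its own for routine actions but pauses for human sign-off before anything risky. The sketch below assumes a hypothetical `Action` type with a `risk` label and an `approve` callback standing in for a human reviewer; it is not taken from any particular framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    risk: str  # hypothetical labels: "low" or "high"


def execute_with_oversight(
    actions: list[Action], approve: Callable[[Action], bool]
) -> list[str]:
    """Run low-risk actions automatically; require human approval for everything else."""
    results = []
    for action in actions:
        # Fail closed: anything not explicitly labeled low-risk needs sign-off.
        if action.risk != "low" and not approve(action):
            results.append(f"blocked: {action.name}")
            continue
        results.append(f"done: {action.name}")  # stand-in for actually executing the action
    return results


plan = [
    Action("read_invoice", "low"),
    Action("draft_email", "low"),
    Action("send_payment", "high"),
]
# A stub reviewer that rejects everything; in practice this could be a UI prompt or ticket queue.
outcome = execute_with_oversight(plan, approve=lambda action: False)
print(outcome)  # ['done: read_invoice', 'done: draft_email', 'blocked: send_payment']
```

The gate fails closed: an unlabeled or mislabeled action is blocked rather than silently executed, which trades some convenience for a smaller blast radius when the agent misjudges a step.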

🔹 Looking Ahead to 2030

- Hybrid Models: Expect “human-in-the-loop” oversight for sensitive domains like healthcare and law.
- Vertical Specialization: Agents trained for finance, medicine, or robotics will outpace general-purpose ones.
- New Benchmarks: Long-horizon, adaptive tests will replace static benchmarks like MMLU.
- Ethics & Regulation: Policymakers will face pressure to set limits on what autonomous AI can do without human approval.